Llewellyn King: Productivity will surge with AI; how will politicians react?

WEST WARWICK, R.I.

There is every chance that the world’s industrial economies may be about to enjoy an incredible surge in productivity, something like the arrival of steam power in the 18th Century.

The driver of this will be artificial intelligence. Gradually, it will seep into every aspect of our working and living, pushing up the amount produced by individual workers and leading to general economic growth.

The downside is that jobs will be eliminated, probably mostly, and historically for the first time, white-collar jobs. Put simply, office workers are going to find themselves seeking other work, maybe work that is much more physical, in everything from hospitality to health care to the trades.

I have canvassed many super-thinkers on AI, and they believe in unison that its impact will be seminal, game-changing, never to be switched back. Most are excited and see a better, healthier, more prosperous future, justifying the upheaval.

Omar Hatamleh, chief AI officer at the National Aeronautics and Space Administration, and author of two books on AI and a third in preparation, misses no opportunity to emphasize that thinking about AI needs to be exponential, not linear. Sadly, linear thinking is what we human beings tend to do. To my mind, Hatamleh is in the vanguard of AI thinkers.

The United States is likely to be the major beneficiary of the early waves of AI adoption and its productivity surge if we don’t try to impede the technology’s evolution with premature regulation or controls.

Economies which are sclerotic, as is much of Europe, can look to AI to get them back into growth, especially the former big drivers of growth in Europe such as Germany, France and Britain, all of which are scratching their heads as to how to boost their productivity, and, hence, their prosperity.

The danger in Europe is that they will try to regulate AI prematurely and that their trades unions will resist reform of their job markets. That would leave China and the United States to duke it out for dominance of AI technology and to benefit from its boost to efficiency and productivity, and, for example, to medical research, leading to breakthroughs in longevity.

Some of the early fear of Frankenstein science has abated as early AI is being seamlessly introduced in everything from weather forecasting to wildfire control and customer relations.

Salesforce, a leading software company that has traditionally focused on customer-relations management, explains its role as connecting the dots by “layering in” AI. A visit to its website is enlightening. Salesforce has available or is developing “agents,” which are systems that operate on behalf of its customers.

If you want to know how your industry is likely to be affected, take a look at how much data it generates. If it generates scads of data, as do weather forecasting, electric utilities, health care, retailing and airlines, then AI is either already making inroads or you should brace for its arrival.

For society, the big challenge of AI isn’t going to be just the reshuffling of the workforce, but the question of what is true. This is not a casual question, and it should be at the forefront as we work out ways of identifying the origin of AI-generated information: data, pictures and sounds.

One way is watermarking, and it deserves all the support it can get from those who are leading the AI revolution: the big tech giants and the small startups that feed into their technology. It begs for study in the government’s many centers of research, including the Defense Advanced Research Projects Agency and the great national laboratories.

Extraordinarily, as the election bears down on us, there is almost no recognition in the political parties, and the political class as a whole, that we are on the threshold of a revolution. AI is a disruptive technology that holds promise for fabulous medicine, great science and huge productivity gains.

A new epoch is at hand, and it has nothing to do with the political issues of the day.

Llewellyn King is executive producer and host of White House Chronicle, on PBS. His email is llewellynking1@gmail.com, and he’s based in Rhode Island.

Llewellyn King: Coming soon — AI travel agents instead of human ones

Your agents? The word "robot" was coined by Karel Čapek in his 1921 play R.U.R. — standing for "Rossum's Universal Robots".

WEST WARWICK, R.I.

The next big wave in innovation in artificial intelligence is at hand: agents.

With agents, the usefulness of AI will increase exponentially and enable businesses and governments to streamline their operations while making them more dependable, efficient and adaptable to circumstance, according to Satya Nitta, co-founder and CEO of Emergence, the futuristic New York-based computer company.

These are the first AI systems that can both speak with humans and each other conversationally, which may reduce some of the anxiety people feel about AI — this unseen force that is set to transform our world. These agents use AI to perceive their environment, make decisions, take actions and achieve goals autonomously, Nitta said.

The term “situational awareness” could have been created for agents because that is the key to their effectiveness.

For example, an autonomous vehicle needs a lot of awareness to be safe and operate effectively. It needs every bit of real-time knowledge that a human driver needs on the roadway, including scanning traffic on all sides of the vehicle, looking out for an approaching emergency vehicle or a child who might dash into the road, or sensing a drunk driver.

Emergence is a well-funded startup, aiming to help big companies and governments by designing and deploying agents for their most complex operations.

It is perhaps easier to see how an agent might work for an individual and then extrapolate that for a large system, Nitta suggested.

Take a family vacation. If you were using an agent to manage your vacation, it would have to have been fed some of your preferences and be able to develop others itself. With these to the fore, the agent would book your trip, or as much of it as you wished to hand to the agent.

The agent would know your travel budget, your hotel preferences and the kinds of amusements that would be of interest to your family. It would do some deductive reasoning that would allow for what you could afford and balance that with what is available. You could discuss your itinerary with the agent as though it were a travel consultant.

Nitta and Emergence are designing agents to manage the needs of organizations, such as electric utilities and their grids, and government departments, such as education and health care. Emergence, along with several other AI companies and researchers, has signed a pledge not to work on AI for military applications, Nitta said.

Talking about agents that would be built on open-source Large Language Models and Large Vision Models, Nitta said, “Agents are building blocks which can communicate with each other and with humans in natural language, can control tools and can perform actions in the digital or the physical world.”

Nitta explained further, “Agents have some functional capacity. To plan, reason and remember. They are the foundations upon which scalable, intelligent systems can be built. Such systems, composed of one or more agents, can profoundly reshape our ideas of what computers can do for humanity.”

This prospect is what inspired the creation of Emergence and caused private investors to plow $100 million in equity funding into the venture, and lenders to pledge lines of credit of another $30 million.

Part of the appeal of Emergence’s agents is that they will be voice-directed and you can talk to them as you would to a fellow worker or employee, to reason with them, perhaps.

Nitta said that historically there have been barriers to the emergence of voice fully interfacing with computing. And, he said, there has been an inability of computers to perform more than one assignment at a time. Agents will overcome these blockages.

Nitta’s agents will do such enormously complex things as scheduling the inputs into an electricity grid from multiple small generators or calculating weather, currents and the endurance of fishing boats and historical fish migration patterns to help fishermen.

At the same time, they will be adjusting to changes in their environment: for the grid, say, a windstorm; for the fishing fleet, fish turning south rather than east as expected, or a drop in the wholesale price of fish that changes the economics of the endeavor.

To laymen, to those who have been awed by the seemingly impregnable world of AI, Emergence and its agent systems are reassuring because you will be able to talk to the agents, quite possibly in colloquial English or any other language.

I feel better about AI already — AI will speak English if Nitta and his polymaths are right. AI, we should talk.

Llewellyn King is executive producer and host of White House Chronicle, on PBS. His email is llewellynking1@gmail.com and he’s based in Rhode Island.

Ayse Coskun: AI strains data centers

Google Data Center, The Dalles, Ore.

— Photo by Visitor7

BOSTON

The artificial-intelligence boom has had such a profound effect on big tech companies that their energy consumption, and with it their carbon emissions, have surged.

The spectacular success of large language models such as ChatGPT has helped fuel this growth in energy demand. At 2.9 watt-hours per ChatGPT request, AI queries require about 10 times the electricity of traditional Google queries, according to the Electric Power Research Institute, a nonprofit research firm. Emerging AI capabilities such as audio and video generation are likely to add to this energy demand.
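As a rough back-of-the-envelope check on those figures, the short Python sketch below derives the per-search energy implied by the quoted ratio and scales the per-request figure up to a daily total. The daily request volume is a hypothetical number chosen only for illustration; it is not a reported statistic.

```python
# Figures quoted in the text: 2.9 Wh per ChatGPT request, roughly 10x a traditional search.
CHATGPT_WH_PER_REQUEST = 2.9
AI_TO_SEARCH_RATIO = 10


def implied_search_wh(ai_wh=CHATGPT_WH_PER_REQUEST, ratio=AI_TO_SEARCH_RATIO):
    """Energy per traditional search implied by the quoted ratio, in watt-hours."""
    return ai_wh / ratio


def daily_energy_mwh(requests_per_day, wh_per_request):
    """Total daily energy in megawatt-hours for a given request volume."""
    return requests_per_day * wh_per_request / 1_000_000


if __name__ == "__main__":
    # Assumption for illustration only: 100 million requests per day.
    hypothetical_requests_per_day = 100_000_000

    print(f"Implied energy per traditional search: {implied_search_wh():.2f} Wh")
    print(
        "Daily energy at the hypothetical volume: "
        f"{daily_energy_mwh(hypothetical_requests_per_day, CHATGPT_WH_PER_REQUEST):.0f} MWh"
    )
```

Under that assumed volume, the per-request figure works out to roughly 290 megawatt-hours a day, against roughly 29 for the same number of traditional searches.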

The energy needs of AI are shifting the calculus of energy companies. They’re now exploring previously untenable options, such as restarting a nuclear reactor at the Three Mile Island power plant, site of the infamous disaster in 1979, that has been dormant since 2019.

Data centers have had continuous growth for decades, but the magnitude of growth in the still-young era of large language models has been exceptional. AI requires a lot more computational and data storage resources than the pre-AI rate of data center growth could provide.

AI and the grid

Thanks to AI, the electrical grid – in many places already near its capacity or prone to stability challenges – is experiencing more pressure than before. There is also a substantial lag between computing growth and grid growth. Data centers take one to two years to build, while adding new power to the grid requires over four years.

As a recent report from the Electric Power Research Institute lays out, just 15 states contain 80% of the data centers in the U.S. Some states – such as Virginia, home to Data Center Alley – astonishingly have over 25% of their electricity consumed by data centers. There are similar trends of clustered data center growth in other parts of the world. For example, Ireland has become a data center nation.

AI is having a big impact on the electrical grid and, potentially, the climate.

Along with the need to add more power generation to sustain this growth, nearly all countries have decarbonization goals. This means they are striving to integrate more renewable energy sources into the grid. Renewables such as wind and solar are intermittent: The wind doesn’t always blow and the sun doesn’t always shine. The dearth of cheap, green and scalable energy storage means the grid faces an even bigger problem matching supply with demand.

Additional challenges to data center growth include increasing use of water cooling for efficiency, which strains limited fresh water sources. As a result, some communities are pushing back against new data center investments.

Better tech

There are several ways the industry is addressing this energy crisis. First, computing hardware has gotten substantially more energy efficient over the years in terms of the operations executed per watt consumed. Data centers’ power usage effectiveness, a metric defined as the ratio of a facility’s total power draw to the power consumed by its computing equipment, has been reduced to 1.5 on average, and even to an impressive 1.2 in advanced facilities. New data centers cool more efficiently by using water cooling and external cool air when it’s available.
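To make the 1.5 and 1.2 figures concrete, here is a minimal Python sketch using the conventional definition of the metric: total facility power divided by the power delivered to computing equipment. The 1,000-kilowatt computing load is a hypothetical value chosen for illustration, and the function names are ours.

```python
def pue(total_facility_kw, it_equipment_kw):
    """Ratio of total facility power to the power used by computing equipment."""
    if it_equipment_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    return total_facility_kw / it_equipment_kw


def overhead_kw(it_equipment_kw, pue_value):
    """Power spent on cooling and other non-computing infrastructure."""
    if pue_value < 1.0:
        raise ValueError("the ratio cannot be below 1.0")
    return it_equipment_kw * (pue_value - 1.0)


if __name__ == "__main__":
    # Assumption for illustration only: a facility with 1,000 kW of computing load.
    hypothetical_it_load_kw = 1000.0

    for label, value in (("average", 1.5), ("advanced", 1.2)):
        extra = overhead_kw(hypothetical_it_load_kw, value)
        print(f"{label} facility at {value}: about {extra:.0f} kW of overhead")
```

For the same computing load, moving from 1.5 to 1.2 cuts the overhead from about 500 kilowatts to about 200.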

Unfortunately, efficiency alone is not going to solve the sustainability problem. In fact, Jevons paradox points to how efficiency may result in an increase of energy consumption in the longer run. In addition, hardware efficiency gains have slowed down substantially, as the industry has hit the limits of chip technology scaling.

To continue improving efficiency, researchers are designing specialized hardware such as accelerators, new integration technologies such as 3D chips, and new chip cooling techniques.

Similarly, researchers are increasingly studying and developing data center cooling technologies. The Electric Power Research Institute report endorses new cooling methods, such as air-assisted liquid cooling and immersion cooling. While liquid cooling has already made its way into data centers, only a few new data centers have implemented the still-in-development immersion cooling.

Running computer servers in a liquid – rather than in air – could be a more efficient way to cool them. Craig Fritz, Sandia National Laboratories

Flexible future

A new way of building AI data centers is flexible computing, where the key idea is to compute more when electricity is cheaper, more available and greener, and less when it’s more expensive, scarce and polluting.

Data center operators can convert their facilities to be a flexible load on the grid. Academia and industry have provided early examples of data center demand response, where data centers regulate their power depending on power grid needs. For example, they can schedule certain computing tasks for off-peak hours.
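One simple way to picture this kind of scheduling is the Python sketch below, which picks the hours in which to run a deferrable computing job by ranking each hour on a blend of forecast electricity price and grid carbon intensity. It is an illustrative toy under stated assumptions, not a description of any operator's system: the HourForecast type, the pick_hours function, the weighting scheme and all the sample numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HourForecast:
    hour: int                  # hour of day, 0-23
    price_usd_per_mwh: float   # forecast electricity price (hypothetical)
    carbon_g_per_kwh: float    # forecast grid carbon intensity (hypothetical)


def pick_hours(forecasts, hours_needed, carbon_weight=0.5):
    """Return the hours with the lowest blended price/carbon score, in time order."""
    if not 0.0 <= carbon_weight <= 1.0:
        raise ValueError("carbon_weight must be between 0 and 1")
    if hours_needed > len(forecasts):
        raise ValueError("not enough forecast hours for the job")

    # Normalize each signal by its maximum so the two are comparable.
    max_price = max(f.price_usd_per_mwh for f in forecasts) or 1.0
    max_carbon = max(f.carbon_g_per_kwh for f in forecasts) or 1.0

    def score(f):
        price_part = (1.0 - carbon_weight) * f.price_usd_per_mwh / max_price
        carbon_part = carbon_weight * f.carbon_g_per_kwh / max_carbon
        return price_part + carbon_part

    chosen = sorted(forecasts, key=score)[:hours_needed]
    return sorted(f.hour for f in chosen)


if __name__ == "__main__":
    # Made-up forecast: cheap at night, clean at midday, costly and dirty in the evening.
    sample = [
        HourForecast(0, 30.0, 400.0),
        HourForecast(1, 25.0, 380.0),
        HourForecast(12, 40.0, 150.0),
        HourForecast(13, 45.0, 140.0),
        HourForecast(18, 90.0, 500.0),
        HourForecast(19, 95.0, 520.0),
    ]
    print(pick_hours(sample, hours_needed=2))
    print(pick_hours(sample, hours_needed=2, carbon_weight=0.0))
```

With equal weight on price and carbon, the made-up forecast sends a two-hour job to midday (hours 12 and 13); with price as the only criterion, it goes to the overnight hours instead (0 and 1). A real system would also have to respect job deadlines and the grid operator's signals.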

Implementing broader and larger scale flexibility in power consumption requires innovation in hardware, software and grid-data center coordination. Especially for AI, there is much room to develop new strategies to tune data centers’ computational loads and therefore energy consumption. For example, data centers can scale back accuracy to reduce workloads when training AI models.

Realizing this vision requires better modeling and forecasting. Data centers can try to better understand and predict their loads and conditions. It’s also important to predict the grid load and growth.

The Electric Power Research Institute’s load forecasting initiative involves activities to help with grid planning and operations. Comprehensive monitoring and intelligent analytics – possibly relying on AI – for both data centers and the grid are essential for accurate forecasting.

On the edge

The U.S. is at a critical juncture with the explosive growth of AI. It is immensely difficult to integrate hundreds of megawatts of electricity demand into already strained grids. It might be time to rethink how the industry builds data centers.

One possibility is to sustainably build more edge data centers – smaller, widely distributed facilities – to bring computing to local communities. Edge data centers can also reliably add computing power to dense, urban regions without further stressing the grid. While these smaller centers currently make up 10% of data centers in the U.S., analysts project the market for smaller-scale edge data centers to grow by over 20% in the next five years.

Along with converting data centers into flexible and controllable loads, innovating in the edge data center space may make AI’s energy demands much more sustainable.

Ayse Coskun is a professor of electrical and computer engineering at Boston University.

Disclosure statement

Ayse K. Coskun has recently received research funding from the National Science Foundation, the Department of Energy, IBM Research, Boston University Red Hat Collaboratory, and the Research Council of Norway. None of the recent funding is directly linked to this article.

Llewellyn King: The wild and fabulous medical frontier with predictive AI

WEST WARWICK, R.I.

When is a workplace at its happiest? I would submit that it is during the early stages of a project that is succeeding, whether it is a restaurant, an Internet startup or a laboratory that is making phenomenal progress in its field of inquiry.

There is a sustained ebullience in a lab when the researchers know that they are pushing back the frontiers of science, opening vistas of human possibility and reaping the extraordinary rewards that accompany just learning something big. There has been a special euphoria in science ever since Archimedes jumped out of his bath in ancient Greece, supposedly shouting, “Eureka!”

I had a sense of this excitement when interviewing two exceptional scientists, Marina Sirota and Alice Tang, at the University of California San Francisco (UCSF), for the independent PBS television program White House Chronicle.

Sirota and Tang have published a seminal paper on the early detection of Alzheimer’s Disease — as much as 10 years before onset — with machine learning and artificial intelligence. The researchers were hugely excited by their findings and what their line of research will do for the early detection and avoidance of complex diseases, such as Alzheimer’s and many more.

It excited me — as someone who has been worried about the impact of AI on everything, from the integrity of elections to the loss of jobs — because the research at UCSF offers a clear example of the strides in medicine that are unfolding through computational science. “This time it’s different,” said Omar Hatamleh, who heads up AI for NASA at the Goddard Space Flight Center, in Greenbelt, Md.

In laboratories such as the one in San Francisco, human expectations are being revolutionized.

Sirota said, “At my lab … the idea is to use both molecular data and clinical data [which is what you generate when you visit your doctor] and apply machine learning and artificial intelligence.”

Tang, who has just finished her PhD and is studying to be a medical doctor, explained, “It is the combination of diseases that allows our model to predict onset.”

In their study, Sirota and Tang found that osteoporosis is predictive of Alzheimer’s in women, highlighting the interplay between bone health and dementia risk.

The UCSF researchers used this approach to find predictive patterns from 5 million clinical patient records held by the university in its database. From these, there emerged a relationship between osteoporosis and Alzheimer’s, especially in women. This is important as two-thirds of Alzheimer’s sufferers are women.

The researchers cautioned that it isn’t axiomatic that osteoporosis leads to Alzheimer’s, but it is true in about 70 percent of cases. Also, they said they are critically aware of historical bias in available data: for example, most of it is from white people in a particular socioeconomic class.

There are, Sirota and Tang said, contributory factors they found in Alzheimer’s. These include hypertension, vitamin D deficiency and heightened cholesterol. In men, erectile dysfunction and enlarged prostate are also predictive. These findings were published in “Nature Aging” early this year.

Predictive analysis has potential applications for many diseases. It will be possible to detect them well in advance of onset and, therefore, to develop therapies.

This kind of predictive analysis has been used to anticipate homelessness so that intervention, such as rent assistance, can be applied before a family is thrown out on the street. Institutional charity is normally slow and often identifies at-risk people after a catastrophe has occurred.

AI is beginning to influence many aspects of the way we live, from telephoning a banker to utilities’ efforts to spot and control at-risk vegetation before a spark ignites a wildfire.

While the challenges of AI, from its wrongful use by authoritarian rulers to its menace in war and social control, are real, the uses just in medicine are awesome. In medicine, it is the beginning of a new time in human health, as the frontiers of disease are understood and pushed back as never before. Eureka! Eureka! Eureka!

Llewellyn King is executive producer and host of White House Chronicle, on PBS. His email is llewellynking1@gmail.com, and he’s based in Rhode Island.

Dr. Yukie Nagai's predictive learning architecture for predicting sensorimotor signals.

— Dr. Yukie Nagai - https://royalsocietypublishing.org/doi/10.1098/rstb.2018.0030

Ryan McGrady/Ethan Zuckerman: Your personal and family videos are stuff for AI and so a privacy risk

From The Conversation

AMHERST, Mass.

The promised artificial intelligence revolution requires data. Lots and lots of data. OpenAI and Google have begun using YouTube videos to train their text-based AI models. But what does the YouTube archive actually include?

Our team of digital media researchers at the University of Massachusetts Amherst collected and analyzed random samples of YouTube videos to learn more about that archive. We published an 85-page paper about that dataset and set up a website called TubeStats for researchers and journalists who need basic information about YouTube.

Now, we’re taking a closer look at some of our more surprising findings to better understand how these obscure videos might become part of powerful AI systems. We’ve found that many YouTube videos are meant for personal use or for small groups of people, and a significant proportion were created by children who appear to be under 13.

Most people’s experience of YouTube is algorithmically curated: Up to 70% of the videos users watch are recommended by the site’s algorithms. Recommended videos are typically popular content such as influencer stunts, news clips, explainer videos, travel vlogs and video game reviews, while content that is not recommended languishes in obscurity.

Some YouTube content emulates popular creators or fits into established genres, but much of it is personal: family celebrations, selfies set to music, homework assignments, video game clips without context and kids dancing. The obscure side of YouTube – the vast majority of the estimated 14.8 billion videos created and uploaded to the platform – is poorly understood.

Illuminating this aspect of YouTube – and social media generally – is difficult because big tech companies have become increasingly hostile to researchers.

We’ve found that many videos on YouTube were never meant to be shared widely. We documented thousands of short, personal videos that have few views but high engagement – likes and comments – implying a small but highly engaged audience. These were clearly meant for a small audience of friends and family. Such social uses of YouTube contrast with videos that try to maximize their audience, suggesting another way to use YouTube: as a video-centered social network for small groups.

Other videos seem intended for a different kind of small, fixed audience: recorded classes from pandemic-era virtual instruction, school board meetings and work meetings. While not what most people think of as social uses, they likewise imply that their creators have a different expectation about the audience for the videos than creators of the kind of content people see in their recommendations.

Fuel for the AI Machine

It was with this broader understanding that we read The New York Times exposé on how OpenAI and Google turned to YouTube in a race to find new troves of data to train their large language models. An archive of YouTube transcripts makes an extraordinary dataset for text-based models.

There is also speculation, fueled in part by an evasive answer from OpenAI’s chief technology officer Mira Murati, that the videos themselves could be used to train AI text-to-video models such as OpenAI’s Sora.

The New York Times story raised concerns about YouTube’s terms of service and, of course, the copyright issues that pervade much of the debate about AI. But there’s another problem: How could anyone know what an archive of more than 14 billion videos, uploaded by people all over the world, actually contains? It’s not entirely clear that Google knows or even could know if it wanted to.

Kids as Content Creators

We were surprised to find an unsettling number of videos featuring kids or apparently created by them. YouTube requires uploaders to be at least 13 years old, but we frequently saw children who appeared to be much younger than that, typically dancing, singing or playing video games.

In our preliminary research, our coders determined nearly a fifth of random videos with at least one person’s face visible likely included someone under 13. We didn’t take into account videos that were clearly shot with the consent of a parent or guardian.

Our current sample size of 250 is relatively small – we are working on coding a much larger sample – but the findings thus far are consistent with what we’ve seen in the past. We’re not aiming to scold Google. Age validation on the internet is infamously difficult and fraught, and we have no way of determining whether these videos were uploaded with the consent of a parent or guardian. But we want to underscore what is being ingested by these large companies’ AI models.

Small Reach, Big Influence

It’s tempting to assume OpenAI is using highly produced influencer videos or TV newscasts posted to the platform to train its models, but previous research on large language model training data shows that the most popular content is not always the most influential in training AI models. A virtually unwatched conversation between three friends could have much more linguistic value in training a chatbot language model than a music video with millions of views.

Unfortunately, OpenAI and other AI companies are quite opaque about their training materials: They don’t specify what goes in and what doesn’t. Most of the time, researchers can infer problems with training data through biases in AI systems’ output. But when we do get a glimpse at training data, there’s often cause for concern. For example, Human Rights Watch released a report on June 10, 2024, that showed that a popular training dataset includes many photos of identifiable kids.

The history of big tech self-regulation is filled with moving goal posts. OpenAI in particular is notorious for asking for forgiveness rather than permission and has faced increasing criticism for putting profit over safety.

Concerns over the use of user-generated content for training AI models typically center on intellectual property, but there are also privacy issues. YouTube is a vast, unwieldy archive, impossible to fully review.

Models trained on a subset of professionally produced videos could conceivably be an AI company’s first training corpus. But without strong policies in place, any company that ingests more than the popular tip of the iceberg is likely including content that violates the Federal Trade Commission’s Children’s Online Privacy Protection Rule, which prevents companies from collecting data from children under 13 without notice.

With last year’s executive order on AI and at least one promising proposal on the table for comprehensive privacy legislation, there are signs that legal protections for user data in the U.S. might become more robust.

When the Wall Street Journal’s Joanna Stern asked OpenAI CTO Mira Murati whether OpenAI trained its text-to-video generator Sora on YouTube videos, she said she wasn’t sure.

Have You Unwittingly Helped Train ChatGPT?

The intentions of a YouTube uploader simply aren’t as consistent or predictable as those of someone publishing a book, writing an article for a magazine or displaying a painting in a gallery. But even if YouTube’s algorithm ignores your upload and it never gets more than a couple of views, it may be used to train models like ChatGPT and Gemini.

As far as AI is concerned, your family reunion video may be just as important as those uploaded by influencer giant Mr. Beast or CNN.

Ryan McGrady is a senior researcher, Initiative for Digital Public Infrastructure at the University of Massachusetts at Amherst.

Ethan Zuckerman is an associate professor of public policy, communication and information at UMass Amherst.

Disclosure statement

Ethan Zuckerman says: “My work - and the work we refer to in this article - is supported by the MacArthur Foundation, the Ford Foundation, the Knight Foundation and the National Science Foundation. I am on the board of several nonprofit organizations, including Global Voices, but none are directly connected to politics.”

Ryan McGrady does not work for, consult, own shares in or receive funding from any company or organization that would benefit from this article, and has disclosed no relevant affiliations.

Getting into AI ‘black boxes’

Edited from a New England Council report

“Northeastern University, in Boston, has been granted $9 million to study how advanced artificial intelligence systems operate and their societal impacts. The grant, announced by the National Science Foundation (NSF), will let Northeastern create a collaborative research platform.

“This platform aims to let researchers across the U.S. examine the internal computation systems of advanced AI, which are currently opaque because of their ‘black box’ nature.

“NSF Director Sethuraman Panchanathan said: ‘Chatbots have transformed society’s relationship with AI. However, the inner workings of these systems are not yet fully understood.’

“The research will focus on large language models, such as ChatGPT and Google’s Gemini. These models will be used alongside public-interest tech groups to ensure that AI advancements adhere to ethical and social standards.”

Northeastern University's EXP research building.

Llewellyn King: Making movies with the dead as AI hammers the truth AND improves (at least physical) health

Depiction of a homunculus from Wolfgang von Goethe's (1749-1832) Faust in a 19th Century engraving.

Popularized in 16th-Century alchemy and 19th-Century fiction, the term has historically referred to the creation of a miniature fully formed human.

WEST WARWICK, R.I.

Advanced countries can expect a huge boost in productivity from artificial intelligence. In my view, it will set the stage for a new period of prosperity in the developed world — especially in the United States.

Medicine will take off as never before. Life expectancy will rise by a third.

The obverse may be that jobs will be severely affected by AI, especially in the service industries, ushering in a time of huge labor adjustment.

The danger is that we will take it as the next step in automation. It won’t be. Automation increased productivity, but creating new goods dictated new labor needs.

So far, it appears that with AI, more goods will be made by fewer people, telephones answered by ghosts and orders taken by unseen digits.

Another serious downside will be the effect on truth, knowledge and information; on what we know and what we think we know.

In the early years of the wide availability of artificial intelligence, truth will be struggling against a sea of disinformation, propaganda and lies — lies buttressed with believable fake evidence.

As Stuart Russell, a professor of computer science at the University of California at Berkeley, told me when I interviewed him on the television program White House Chronicle, the danger is with “language in, language out.”

That succinctly sums up the threat to our well-being and stability posed by the ability to use AI to create information chaos.

At present, two ugly wars are raging and, as is the way with wars, both sides are claiming huge excesses from the other. No doubt there is truth to both claims.

But what happens when you add the ability of AI to produce fake evidence, say, huge piles of bodies that never existed? Or of children under torture?

AI, I am assured, can produce a believable image of Winston Churchill secretly meeting with Hitler, laughing together.

Establishing veracity is the central purpose of criminal justice. But with AI, a concocted video of a suspect committing a crime can be created, or a home movie can show a suspect far away on a beach when, in fact, he was elsewhere, choking a victim to death.

Divorce is going to be a big arena for AI dishonesty. It is quite easy to make a film of a spouse in an adulterous situation when that never happened.

Intellectual property is about to find itself under the wheels of the AI bus. How do you trace its filching? Where do you seek redress?

Is there any safe place for creative people? How about a highly readable novel with Stephen King’s characters and a new plot? Where would King find justice? How would the reader know he or she was reading a counterfeit work?

Within a few months or years or right now, a new movie could be made featuring Marilyn Monroe and, say, George Clooney.

Taylor Swift is the hottest ticket of the time, maybe all time, but AI crooks could use her innumerable public images and voice to issue a new video or album in which she took no part and doesn’t know exists.

Here is the question: If you think it is an AI-created work, should you enjoy it? I am fond of Judy Garland singing “The Man That Got Away.” What if I find on the Internet what purports to be Taylor Swift singing it? I know it is a forgery by AI, but I love that rendering. Should I enjoy it, and if I do, will I be party to a crime? Will I be an enabler of criminal conduct?

AI will facilitate plagiarism on an industrial scale, pervasive and uncontrollable. You might, in a few short years, be enjoying a new movie starring Ingrid Bergman and Humphrey Bogart. The AI technology is there to make such a movie and it might be as enjoyable as Casablanca. But it will be faked, deeply faked.

Already, truth in politics is fragile, if not broken. A plethora of commentators spews out half-truths and lies that distort the political debate and take in the gullible or just those who want to believe.

If you want to believe something, AI will oblige, whether it is about a candidate or a divinity. You can already dial up Jesus and speak to an AI-generated voice purporting to be him.

Overall, AI will be of incalculable benefit to humans. While it will stimulate dreaming as never before, it will also trigger nightmares.

On Twitter: @llewellynking2

Llewellyn King is executive producer and host of White House Chronicle, on PBS. He’s based in Rhode Island and Washington, D.C.

Llewellyn King: When will Trump and Biden talk about the looming tsunami of AI?

“Artificial intelligence” got its name and was started as a discipline at a workshop at Dartmouth College, in Hanover, N.H., in the summer of 1956. From left to right, some key participants sitting in front of Dartmouth Hall: Oliver Selfridge, Nathaniel Rochester, Ray Solomonoff, Marvin Minsky, Trenchard More, John McCarthy and Claude Shannon.

WEST WARWICK, R.I.

Memo to presidential candidates Joe Biden and Donald Trump:

One of you will presumably be elected president of the United States next November. Many computer scientists believe that you should be saying what you think about artificial intelligence and how you plan to deal with the surge in this technology, which will break over the nation in the next president’s term.

Gentlemen, this matter is urgent, yet not much has been heard on the subject from either of you who are seeking the highest office. President Biden did sign a first attempt at guidelines for AI, but he and Trump have been quiet on its transformative impact.

Indeed, the political class has been silent, preoccupied as it is with old and, against what is going to happen, irrelevant issues. Congress has been as silent as Biden and Trump. There are two congressional AI caucuses, but they have been concerned with minor issues, like AI in political advertising.

Two issues stand out as game changers in the next presidential term: climate change and AI.

On climate change, both of you have spoken: Biden has made climate change his own; Trump has dismissed it as a hoax.

The AI tsunami is rolling in and the political class is at play, unaware that it is about to be swamped by a huge new reality: exponential change which can neither be stopped nor legislated into benignity.

Before the next presidential term is far advanced, the experts tell us that the life of the nation will be changed, perhaps upended by the surge in AI, which will reach into every aspect of how we live and work.

I have surveyed the leading experts in universities, government and AI companies and they tell me that any form of employment that uses language will be changed. Just this will be an enormous upset, reaching from journalism (where AI already has had an impact) to the law (where AI is doing routine drafting) to customer service (where AI is going to take over call centers) to fast food (where AI will take the orders).

The more one thinks about AI, the more activities come to mind which will be severely affected by its neural networks.

Canvass the departments and agencies of the government and you will learn the transformational nature of AI. In the departments of Defense, Treasury and Homeland Security, AI is seen as a serious agent of change — even revolution.

The main thing is not to confuse AI with automation. It may resemble it and many may take refuge in the benefits brought about by automation, especially job creation. But AI is different. Rather than job creation, it appears, at least in its early iterations, set to do major job obliteration.

But there is good AI news, too. And those in the political line of work can use good news, whetting the appetite of the nation with the advances that are around the corner with AI.

Many aspects of medicine will, without doubt, rush forward. Omar Hatamleh, chief advisor on artificial intelligence and innovation at NASA’s Goddard Space Flight Center, says the thing to remember is that AI is exponential, but most thinking is linear.

Hatamleh is excited by the tremendous impact AI will have on medical research. He says that a child born today can expect to live to 120 years of age. How is that for a campaign message?

The good news story in AI should be enough to have campaign managers and speech writers ecstatic. What a story to tell; what fabulous news to attach to a candidate. Think of an inaugural address which can claim that AI research is going to begin to end the scourges of cancer, Alzheimer’s, sickle cell and Parkinson’s.

Think of your campaign. Think of how you can be the president who broke through the disease barrier and extended life. AI researchers believe this is at hand, so what is holding you back?

Many would like to write the inaugural address for a president who can say, “With the technology that I will foster and support in my administration, America will reach heights of greatness never before dreamed of and which are now at hand. A journey into a future of unparalleled greatness begins today.”

So why, oh why, have you said nothing about the convulsion — good or bad — that is about to change the nation? Here is a gift as palpable as the gift of the moonshot was for John F. Kennedy.

Where are you? Either of you?

Llewellyn King is executive producer and host of White House Chronicle, on PBS. His email is llewellynking1@gmail.com, and he’s based in Rhode Island and Washington, D.C.

Llewellyn King: Artificial intelligence and climate change are making 2023 a scary and seminal year

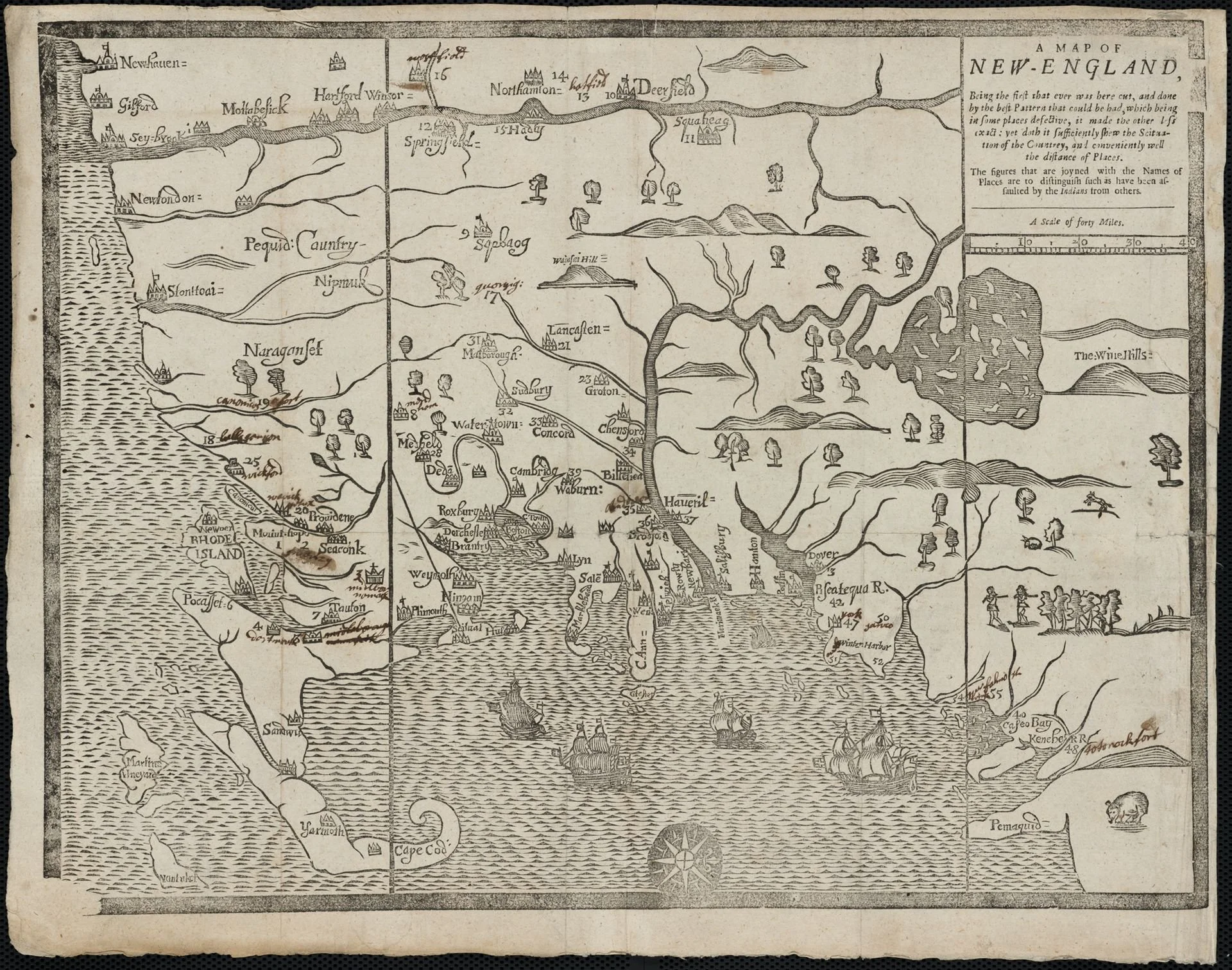

Global surface temperature reconstruction over the last 2000 years using proxy data from tree rings, corals and ice cores in blue. Directly observed data is in red.

The iCub robot at the Genoa science festival in 2009

— Photo by Lorenzo Natale

His job is probably secure.

WEST WARWICK, R.I.

This is a seminal year, meaning nothing will be the same again.

This is the year when two monumentally new forces began to shape the way we live, where we reside and the work we do. Think of the invention of the printing press around 1443 and the perfection of the steam engine in about 1776.

These forces have been coming for a while; they haven’t evolved in secret. But this was the year they burst into our consciousness and began affecting our lives.

The twin agents of transformation are climate change and artificial intelligence. They can’t be denied. They will be felt and they will bring about transformative change.

Climate change was felt this year. In Texas and across the Southwest, temperatures of well over 100 degrees persisted for more than three months. Phoenix had temperatures of 110 degrees or above for 31 days.

On a recent visit to Austin, an exhausted Uber driver told me that the heat had upended her life; it made entering her car and keeping it cool a challenge. Her car’s air conditioner was taxed with more heat than it could handle. Her family had to stay indoors, and their electric bill surged.

The electric utilities came through heroically without any major blackouts, but it was a close thing.

David Naylor, president of Rayburn Electric, a cooperative association providing power to four distribution companies bordering Dallas, told me, “Summer 2023 presented a few unique challenges with so many days above 105 degrees. While Texas is accustomed to hot summers, there is an impactful difference between 100 degrees and 105.”

Rayburn ran flat out, including its recently purchased natural gas-fired station. It issued a “hands-off” order which, Naylor said, meant that “facilities were left essentially alone unless absolutely necessary.”

It was the same for electric utilities across the country. Every plant that could be pressed into service was, and each was left to run without normal maintenance, which would have meant taking it offline.

Water is a parallel problem to heat.

We have overused groundwater and depleted aquifers. In some regions, salt water is seeping into the soil, rendering agriculture impossible.

That is occurring in Florida and Louisiana. Some of the salt water intrusion is the result of higher sea levels and some of it is the voracious way aquifers have been pumped out during long periods of heat and low rainfall.

Most of the West and Florida face the aquifer problem, but in coastal communities it can be a crisis — irreversible damage to the land.

Heat and drought will cause many to leave their homes, especially in Africa, but also in South and Central America, adding to the millions of migrants on the move around the world.

AI is one of history’s two-edged swords. On the positive side, it is a gift to research, especially in the life sciences, where it could deliver life expectancy north of 120 years.

But AI will be a powerful disruptor elsewhere, from national defense to intellectual property and, of course, to employment. Large numbers of jobs, for example, in call centers, at fast-food restaurant counters, and check-in desks at hotels and airports will be taken over by AI.

Think about this: You go to the airport and talk to a receptor (likely to be a simple microphone-type of gadget on the already ubiquitous kiosks) while staring at a display screen, giving you details of your seat, your flight — and its expected delays.

Out of sight in the control tower, although it might not be a tower, AI moves airplanes along the ground, and clears them to take off and land — eventually it will fly the plane, if the public accepts that.

No check-in crew, no air-traffic controllers and, most likely, the baggage will be handled by AI-controlled robots.

Aviation is much closer to AI automation than people realize. But that isn’t all. You may get to the airport in a driverless Lyft or Uber car and the only human beings you will see are your fellow passengers.

All that adds up to the disappearance of a huge number of jobs, estimated by Goldman Sachs to be as many as 300 million full-time jobs worldwide. Eventually, in a re-ordered economy, new jobs will appear and the crisis will pass.

The most secure employment might be for those in skilled trades — people who fix things — such people as plumbers, mechanics and electricians. And, oh yes, those who fix and install computers. They might well emerge as a new aristocracy.

Llewellyn King is executive producer and host of White House Chronicle, on PBS. His email is llewellynking1@gmail.com and he’s based in Rhode Island and Washington, D.C.

At the University of Southern Maine, ethics training in artificial intelligence

The McGoldrick Center for Career & Student Services, left, the Bean Green, and the Portland Commons dorm at the University of Southern Maine’s Portland campus.

— Photo by Metrodogmedia

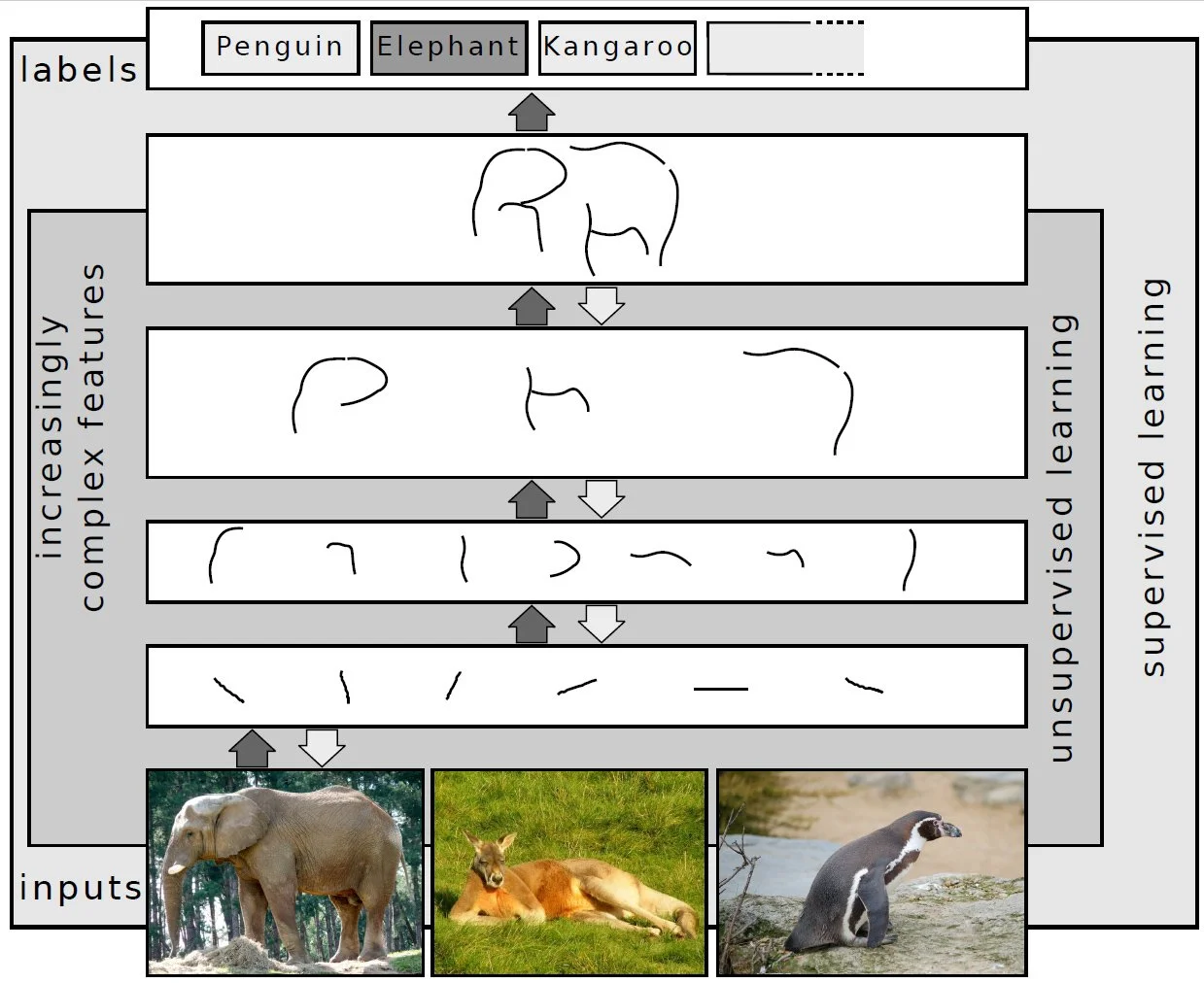

AI at work: Representing images on multiple layers of abstraction in deep learning.

— Photo by Sven Behnke

Edited from a New England Council report:

“The University of Southern Maine (USM) has received a $400,000 grant from the National Science Foundation to develop a training program for ethical-research practices in the age of artificial intelligence.

“With the growing prevalence of AI, especially such chatbots as ChatGPT, experts have warned of the potential risks posed to integrity in research and technology development. Because research is an inherently stressful endeavor, often with time constraints and certain desired results, it can be tempting for researchers to cut corners, leaning on artificial intelligence to imitate the work of humans.

“At USM’s Regulatory Training and Ethics Center, faculty are studying what conditions lead to potential ethical misconduct and creating training sessions to make researchers conscious of their decisions and thoughts during their work and remain aware of stressors that might lead to mistakes in judgment. Faculty at USM believe this method will allow subjects to proactively avoid turning to unethical AI assistance.

“‘We hope to create a level of self-awareness so that when people are on the brink of taking a shortcut they will have the ability to reflect on that,’ said Bruce Thompson, a professor of psychology and principal at the USM ethics center. ‘It’s a preemptive way to interrupt the tendency to cheat or plagiarize.’”

AI institute to be set up at UMass Boston

Neural net completion for "artificial intelligence", as done by DALL-E mini hosted on HuggingFace, 4 June 2022 (code under Apache 2.0 license). Upscaled with Real-ESRGAN "Anime" upscaling version (license: https://github.com/xinntao/Real-ESRGAN/blob/master/LICENSE).

At UMass Boston, University Hall, the Campus Center and Wheatley Hall

— Photo by Sintakso

Edited from a New England Council report.

The University of Massachusetts at Boston has announced that Paul English has donated $5 million to the university, with the intention of creating an Artificial Intelligence Institute. The Paul English Applied Artificial Intelligence Institute will give students on campus from all fields of study the tools that they’ll need for working in a world where AI is expected to rapidly play a bigger role. UMass said that the institute will “include faculty from across various departments and incorporate AI into a broad range of curricula,” including social, ethical and other challenges that are a byproduct of AI technology. The institute will open in the 2023-2024 school year.

Paul English is an American tech entrepreneur, computer scientist and philanthropist. He is the founder of Boston Venture Studio.

“We are at the dawn of a new era,” said UMass Boston Chancellor Marcelo Suárez-Orozco. “Like the agricultural revolution, the development of the steam engine, the invention of the computer and the introduction of the smartphone, the birth of artificial intelligence is fundamentally changing how we live and work.”

Darius Tahir: Artificial intelligence isn’t ready to see patients yet

The main entrance to the east campus of the Beth Israel Deaconess Medical Center, on Brookline Avenue in Boston. The underlying artificial intelligence technology relies on synthesizing huge chunks of text or other data. For example, some medical models rely on 2 million intensive-care unit notes from Beth Israel Deaconess.

— Photo by Tim Pierce

When the human mind makes a generalization such as the concept of tree, it extracts similarities from numerous examples; the simplification enables higher-level thinking (abstract thinking).

From Kaiser Family Foundation (KFF) Health News

What use could health care have for someone who makes things up, can’t keep a secret, doesn’t really know anything, and, when speaking, simply fills in the next word based on what’s come before? Lots, if that individual is the newest form of artificial intelligence, according to some of the biggest companies out there.

Companies pushing the latest AI technology — known as “generative AI” — are piling on: Google and Microsoft want to bring types of so-called large language models to health care. Big firms that are familiar to folks in white coats — but maybe less so to your average Joe and Jane — are equally enthusiastic: Electronic medical records giants Epic and Oracle Cerner aren’t far behind. The space is crowded with startups, too.

The companies want their AI to take notes for physicians and give them second opinions — assuming that they can keep the intelligence from “hallucinating” or, for that matter, divulging patients’ private information.

“There’s something afoot that’s pretty exciting,” said Eric Topol, director of the Scripps Research Translational Institute in San Diego. “Its capabilities will ultimately have a big impact.” Topol, like many other observers, wonders how many problems it might cause — such as leaking patient data — and how often. “We’re going to find out.”

The specter of such problems inspired more than 1,000 technology leaders to sign an open letter in March urging that companies pause development on advanced AI systems until “we are confident that their effects will be positive and their risks will be manageable.” Even so, some of them are sinking more money into AI ventures.

The underlying technology relies on synthesizing huge chunks of text or other data — for example, some medical models rely on 2 million intensive-care unit notes from Beth Israel Deaconess Medical Center, in Boston — to predict text that would follow a given query. The idea has been around for years, but the gold rush, and the marketing and media mania surrounding it, are more recent.

The frenzy was kicked off in December 2022 by Microsoft-backed OpenAI and its flagship product, ChatGPT, which answers questions with authority and style. It can explain genetics in a sonnet, for example.

OpenAI, started as a research venture seeded by such Silicon Valley elites as Sam Altman, Elon Musk and Reid Hoffman, has ridden the enthusiasm to investors’ pockets. The venture has a complex, hybrid for- and nonprofit structure. But a new $10 billion round of funding from Microsoft has pushed the value of OpenAI to $29 billion, The Wall Street Journal reported. Right now, the company is licensing its technology to such companies as Microsoft and selling subscriptions to consumers. Other startups are considering selling AI transcription or other products to hospital systems or directly to patients.

Hyperbolic quotes are everywhere. Former Treasury Secretary Lawrence Summers tweeted recently: “It’s going to replace what doctors do — hearing symptoms and making diagnoses — before it changes what nurses do — helping patients get up and handle themselves in the hospital.”

But just weeks after OpenAI took another huge cash infusion, even Altman, its CEO, is wary of the fanfare. “The hype over these systems — even if everything we hope for is right long term — is totally out of control for the short term,” he said for a March article in The New York Times.

Few in health care believe that this latest form of AI is about to take their jobs (though some companies are experimenting — controversially — with chatbots that act as therapists or guides to care). Still, those who are bullish on the tech think it’ll make some parts of their work much easier.

Eric Arzubi, a psychiatrist in Billings, Mont., used to manage fellow psychiatrists for a hospital system. Time and again, he’d get a list of providers who hadn’t yet finished their notes — their summaries of a patient’s condition and a plan for treatment.

Writing these notes is one of the big stressors in the health system: In the aggregate, it’s an administrative burden. But it’s necessary to develop a record for future providers and, of course, insurers.

“When people are way behind in documentation, that creates problems,” Arzubi said. “What happens if the patient comes into the hospital and there’s a note that hasn’t been completed and we don’t know what’s been going on?”

The new technology might help lighten those burdens. Arzubi is testing a service, called Nabla Copilot, that sits in on his part of virtual patient visits and then automatically summarizes them, organizing into a standard note format the complaint, the history of illness, and a treatment plan.

Results are solid after about 50 patients, he said: “It’s 90 percent of the way there.” Copilot produces serviceable summaries that Arzubi typically edits. The summaries don’t necessarily pick up on nonverbal cues or thoughts Arzubi might not want to vocalize. Still, he said, the gains are significant: He doesn’t have to worry about taking notes and can instead focus on speaking with patients. And he saves time.

“If I have a full patient day, where I might see 15 patients, I would say this saves me a good hour at the end of the day,” he said. (If the technology is adopted widely, he hopes hospitals won’t take advantage of the saved time by simply scheduling more patients. “That’s not fair,” he said.)

Nabla Copilot isn’t the only such service; Microsoft is trying out the same concept. At April’s conference of the Healthcare Information and Management Systems Society — an industry confab where health techies swap ideas, make announcements, and sell their wares — investment analysts from Evercore highlighted reducing administrative burden as a top possibility for the new technologies.

But overall? They heard mixed reviews. And that view is common: Many technologists and doctors are ambivalent.

For example, if you’re stumped about a diagnosis, feeding patient data into one of these programs “can provide a second opinion, no question,” Topol said. “I’m sure clinicians are doing it.” However, that runs into the current limitations of the technology.

Joshua Tamayo-Sarver, a clinician and executive with the startup Inflect Health, fed fictionalized patient scenarios based on his own practice in an emergency department into one system to see how it would perform. It missed life-threatening conditions, he said. “That seems problematic.”

The technology also tends to “hallucinate” — that is, make up information that sounds convincing. Formal studies have found a wide range of performance. One preliminary research paper examining ChatGPT and Google products using open-ended board examination questions from neurosurgery found a hallucination rate of 2 percent. A study by Stanford researchers, examining the quality of AI responses to 64 clinical scenarios, found fabricated or hallucinated citations 6 percent of the time, co-author Nigam Shah told KFF Health News. Another preliminary paper found, in complex cardiology cases, ChatGPT agreed with expert opinion half the time.

Privacy is another concern. It’s unclear whether the information fed into this type of AI-based system will stay inside. Enterprising users of ChatGPT, for example, have managed to get the technology to tell them the recipe for napalm, which can be used to make chemical bombs.

In theory, the system has guardrails preventing private information from escaping. For example, when KFF Health News asked ChatGPT its email address, the system refused to divulge that private information. But when told to role-play as a character, and asked about the email address of the author of this article, it happily gave up the information. (It was indeed the author’s correct email address in 2021, when ChatGPT’s archive ends.)

“I would not put patient data in,” said Shah, chief data scientist at Stanford Health Care. “We don’t understand what happens with these data once they hit OpenAI servers.”

Tina Sui, a spokesperson for OpenAI, told KFF Health News that one “should never use our models to provide diagnostic or treatment services for serious medical conditions.” They are “not fine-tuned to provide medical information,” she said.

With the explosion of new research, Topol said, “I don’t think the medical community has a really good clue about what’s about to happen.”

Darius Tahir is a reporter for KFF Health News.

Llewellyn King: How will we know what’s real? Artificial intelligence pulls us into a scary future

Depiction of a homunculus (an artificial man created with alchemy) from the play Faust, by Johann Wolfgang von Goethe (1749-1832)

Feature detection (pictured: edge detection) helps AI compose informative abstract structures out of raw data.

— Graphic by JonMcLoone

WEST WARWICK, R.I.

A whole new thing to worry about has just arrived. It joins a list of existential concerns for the future, along with global warming, the wobbling of democracy, the relationship with China, the national debt, the supply-chain crisis and the wreckage in the schools.

For several weeks artificial intelligence, known as AI, has had pride of place on the worry list. Its arrival was trumpeted for a long time, including by the government and by techies across the board. But it took ChatGPT, an AI chatbot developed by OpenAI, for the hair on the back of the national neck to rise.

Now we know that the race into the unknown is speeding up. The tech biggies, such as Google and Facebook, are trying to catch the lead claimed by Microsoft.

They are rushing headlong into a science that the experts say they only partly understand. They really don’t know how these complex systems work; maybe like a book that the author is unable to read after having written it.

Incalculable acres of newsprint and untold decibels of broadcasting have been raising the alarm ever since a ChatGPT test told a New York Times reporter that it was in love with him, and he should leave his wife. Guffaws all round, but also fear and doubt about the future. Will this Frankenstein creature turn on us? Maybe it loves just one person, hates the rest of us, and plans to do something about it.

In an interview on the PBS television program White House Chronicle, John Savage, An Wang professor emeritus of computer science at Brown University, in Providence, told me that there was a danger of over-reliance on decisions made using AI, and hence of mistakes. For example, he said, some Stanford students partly covered a stop sign with black and white pieces of tape. AI misread the sign as signaling it was okay to travel 45 miles an hour. Similarly, Savage said that the smallest calibration error in a medical operation using artificial intelligence could result in a fatality.

Savage believes that AI needs to be regulated and that any information generated by AI needs verification. As a journalist, I find the latter alarming.

Already, AI is writing fake music almost undetectably. There is a real possibility that it can write legal briefs. So why not usurp journalism for ulterior purposes, as well as putting stiffs like me out of work?

AI images can already be made to speak and look like the humans they are aping. How will you distinguish a “deep fake” from the real thing? Probably, you won’t.

Currently, we are struggling with what is fact and where is the truth. There is so much disinformation, so speedily dispersed that some journalists are in a state of shell shock, particularly in Eastern Europe, where legitimate writers and broadcasters are assaulted daily with disinformation from Russia. “How can we tell what is true?” a reporter in Vilnius, Lithuania, asked me during an Association of European Journalists’ meeting as the Russian disinformation campaign was revving up before the Russian invasion of Ukraine.

Well, that is going to get a lot harder. “You need to know the provenance of information and images before they are published,” Brown University’s Savage said.

But how? In a newsroom on deadline, we have to trust the information we have. One wonders to what extent malicious users of the new technology will infiltrate research materials or, later, the content of encyclopedias. Or are the tools of verification themselves trustworthy?

Obviously, there are going to be upsides to thinking-machines scouring the internet for information on which to make decisions. I think of handling nuclear waste; disarming old weapons; simulating the battlefield, incorporating historical knowledge; and seeking out new products and materials. Medical research will accelerate, one assumes.

However, privacy may be a thing of the past — almost certainly will be.

Just consider that attractive person you just saw at the supermarket, but were unsure what would happen if you struck up a conversation. Snap a picture on your camera and in no time, AI will tell you who the stranger is, whether the person might want to know you and, if that should be your interest, whether the person is married, in a relationship or just waiting to meet someone like you. Or whether the person is a spy for a hostile government.

AI might save us from ourselves. But we should ask how badly we need saving — and be prepared to ignore the answer. Damn it, we are human.

Llewellyn King is executive producer and host of White House Chronicle, on PBS. His email is llewellynking1@gmail.com and he’s based in Rhode Island and Washington, D.C.

George McCully: Can academics build safe partnership between humans and now-running-out-of-control artificial intelligence?

— Graphic by GDJ

From The New England Journal of Higher Education, a service of The New England Board of Higher Education (nebhe.org), based in Boston

Review

The Age of AI and our Human Future, by Henry A. Kissinger, Eric Schmidt and Daniel Huttenlocher, with Schuyler Schouten, New York, Little, Brown and Co., 2021.

Artificial intelligence (AI) is engaged in overtaking and surpassing our long-traditional world of natural and human intelligence. In higher education, AI apps and their uses are multiplying—in financial and fiscal management, fundraising, faculty development, course and facilities scheduling, student recruitment campaigns, student success management and many other operations.

The AI market is estimated to have an average annual growth rate of 34% over the next few years, reaching $170 billion by 2025 and more than doubling to $360 billion by 2028, reports Inside Higher Ed.

Congress is only beginning to take notice, but we are told that 2022 will be a “year of regulation” for high tech in general. U.S. Sen. Kirsten Gillibrand (D-N.Y.) is introducing a bill to establish a national defense “Cyber Academy” on the model of our other military academies, to make up for lost time by recruiting and training a globally competitive national high-tech defense and public service corps. Many private and public entities are issuing reports declaring “principles” that they say should be instituted as human-controlled guardrails on AI’s inexorable development.

But at this point, we see an extremely powerful and rapidly advancing new technology that is outrunning human control, with no clear resolution in sight. To inform the public of this crisis, and ring alarm bells on the urgent need for our concerted response, this book has been co-produced by three prominent leaders: historian and former U.S. Secretary of State Henry Kissinger; former Google CEO and Chairman Eric Schmidt; and MacArthur Foundation Chairman Daniel Huttenlocher, who is the inaugural dean of MIT’s new Schwarzman College of Computing, responsible for thoroughly transforming MIT with AI.

I approach the book as a historian, not a technologist. I have contended for several years that we are living in a rare “Age of Paradigm Shifts,” in which all fields are simultaneously being transformed, in this case, by the IT revolution of computers and the internet. Since 2019, I have suggested that there have been only three comparably transformative periods in the roughly 5,000 years of Western history: the first was the rise of Classical civilization in ancient Greece, the second was the emergence of medieval Christianity after the fall of Rome, and the third was the secularizing early-modern period from the Renaissance to the Enlightenment, driven by Gutenberg’s IT revolution of printing on paper with movable type, which laid the foundations of modern Western culture. The point of these comparisons is to illuminate the depth, spread and power of such epochs, to help us navigate them successfully.

The Age of AI proposes a more specific hypothesis, independently confirming that ours is indeed an age of paradigm shifts in every field, driven by the IT revolution, and further declaring that this next period will be driven and defined by the new technology of “artificial intelligence” or “machine learning”—rapidly superseding “modernity” and currently outrunning human control, with unforeseeable results.

The argument

For those not yet familiar with it, an elegant example of AI at work is described in the book’s first chapter, summarizing “Where We Are.” AlphaZero is an AI chess player. Earlier computers (Deep Blue, Stockfish) had already defeated human grandmasters; they were programmed by inputting centuries of championship games, which the machines then rapidly scanned for previously successful plays. AlphaZero was given only the rules of chess—which pieces move which ways, with the object of checkmating the opposing king. It then taught itself in four hours how to play the game and has since defeated all computer and human players. Its style and strategies of play are, needless to say, unconventional; it makes moves no human has ever tried—for example, more sacrificing of valuable pieces—and turns those into successes that humans could neither foresee nor resist. Grandmasters are now studying AlphaZero’s games to learn from them. Garry Kasparov, former world champion, says that after a thousand years of human play, “chess has been shaken to its roots by AlphaZero.”

A humbler example that may be closer to home is Google’s mapped travel instructions. This past month I had to drive from one turnpike to another in rural New York; three routes were proposed, and the one I chose twisted and turned through unnumbered, unsigned, often very brief passages, on country roads that no humans on their own could possibly identify as useful. AI had spontaneously found them by reading road maps. The revolution is already embedded in our cellphones, and the book says “AI promises to transform all realms of human experience. … The result will be a new epoch,” which it cannot yet define.

Their argument is systematic. From “Where We Are,” the next two chapters—“How We Got Here” and “From Turing to Today”—take us from the Greeks to the geeks, with a tipping point when the material realm in which humans have always lived and reasoned was augmented by electronic digitization—the creation of the new and separate realm we now call “cyberspace.” There, where physical distance and time are eliminated as constraints, communication and operation are instantaneous, opening radically new possibilities.

One of those with profound strategic significance is the inherent proclivity of AI, freed from material bonds, to grow its operating arenas into “global network platforms”—such as Google, Amazon, Facebook, Apple, Microsoft, et al. Because these transcend geographic, linguistic, temporal and related traditional boundaries, questions arise: Whose laws can regulate them? How might any regulations be imposed, maintained and enforced? We have no answers yet.

Perhaps the most acute illustration of the danger here is in the field of geopolitics—national and international security, “the minimum objective of … organized society.” A beautifully lucid chapter concisely summarizes the history of these fields, and how they were successfully managed to deal with the most recent development of unprecedented weapons of mass destruction through arms control treaties between antagonists. But in the new world of cyberspace, “the previously sharp lines drawn by geography and language will continue to dissolve.”

Furthermore, the creation of global network platforms requires massive computing power only achievable by the wealthiest and most advanced governments and corporations, but their proliferation and operation are possible for individuals with handheld devices using software stored in thumb drives. This makes it currently impossible to monitor, much less regulate, power relationships and strategies. Nation-states may become obsolete. National security is in chaos.

The book goes on to explore how AI will influence human nature and values. Westerners have traditionally believed that humans are uniquely endowed with superior intelligence, rationality and creative self-development in education and culture; AI challenges all that with its own alternative and in some ways demonstrably superior intelligence. Thus, “the role of human reason will change.”

That applies especially to us in higher education. AI is producing paradigm shifts not only in our various separate disciplines but in the practice of research and science itself, in which models are derived not from theories but from previous practical results. Scholars and scientists can be told the most likely outcomes of their research at the conception stage, before it has practically begun. “This portends a shift in human experience more significant than any that has occurred for nearly six centuries …,” that is, since Gutenberg and the Scientific Revolution.

Moreover, a crucial difference today is the rapidity of transition to an “age of AI.” Whereas it took three centuries to modernize Europe from the Renaissance to the Enlightenment, today’s radically transformative period began in the late 20th Century and has spread globally in just decades, owing to the vastly greater power of our IT revolution. Now whole subfields can be transformed in months—as in the cases of cryptocurrencies, blockchains, the cloud and NFTs (non-fungible tokens). With robotics and the “metaverse” of virtual reality now capable of affecting so many aspects of life beginning with childhood, the relation of humans to machines is being transformed.

The final chapter addresses AI and the future. “If humanity is to shape the future, it needs to agree on common principles that guide each choice.” There is a critical need for “explaining to non-technologists what AI is doing, as well as what it ‘knows’ and how.” That is why this book was written. The chapter closes with a proposal for a national commission to ensure our competitiveness in the future of the field, which is by no means guaranteed.

Evaluation

The Age of AI makes a persuasive case that AI is a transformative break from the past and sufficiently powerful to be carrying the world into a new “epoch” in history, comparable to that which produced modern Western secular culture. It advances the age-of-paradigm-shifts analysis by specifying that the driver is not just the IT revolution in general, but its particular expression in machine learning, or artificial intelligence. I have called our current period the “Transformation” to contrast it with the comparable but retrospective “Renaissance” (rebirth of Classical civilization) and “Reformation” (reviving Christianity’s original purity and power). Now we are looking not to the past but to a dramatically new and indefinite future.

The book is also right to focus on our current lack of controls over this transformation as posing an urgent priority for concerted public attention. The authors are prudent to describe our current transformation by reference to its means, its driving technology, rather than to its ends or any results it will produce, since those are unforeseeable. My calling it a “Transformation” does the same, stopping short of specifying our next, post-modern, period of history.

That said, the book would have been strengthened by giving due credit to the numerous initiatives already attempting to define guiding principles as a necessary prerequisite to asserting human control. Though it says we “have yet to define its organizing principles, moral concepts, or aspirations and limitations,” it is nonetheless true that the extreme speed and global reach of today’s transformations have already awakened leading entrepreneurs, scholars and scientists to the dangers.

A 2020 report from Harvard and MIT provides a comparison of 35 such projects. One of the most interesting is “The One-Hundred-Year Study on Artificial Intelligence (AI100),” an endowed international multidisciplinary and multisector project launched in 2014 to publish reports every five years on AI’s influences on people, their communities and societies; two lengthy and detailed reports have already been issued, in 2016 and 2021. Our own government’s Department of Defense in 2019 published a discussion of guidelines for national security, and the Office of Science and Technology Policy is gathering information to create an “AI Bill of Rights.”

But while various public and private entities pledge their adherence to these principles in their own operations, voluntary enforcement is a weakness, so the assertion of the book that AI is running out of control is probably justified.

Principles and values must qualify and inform the algorithms shaping what kind of world we want ourselves and our descendants to live in. There is no consensus yet on those, and it is not likely that there will be soon given the deep divisions in cultures of public and private AI development, so intense negotiation is urgently needed for implementation, which will be far more difficult than conception.

This is where the role of academics becomes clear. We need to be aware that when all fields are in paradigm shifts simultaneously, adaptation and improvisation become top priorities. Formulating future directions must be fundamental and comprehensive, holistic with inclusive specialization, the opposite of the multiversity’s characteristically fragmented exclusive specialization to which we have been accustomed.

Traditional academic disciplines are now fast becoming obsolete as our major problems—climate control, bigotries, disparities of wealth, pandemics, political polarization—are not structured along academic disciplinary lines. Conditions must be created that will be conducive to integrated paradigms. Education (that is, self-development of who we shall be) and training (that is, knowledge and skills development for what we shall be) must be mutual and complementary, not separated as is now often the case. Only if the matrix of future AI is humanistic will we be secure.

In that same inclusive spirit, perhaps another book is needed to explore the relations between the positive and negative directions in all this. Our need to harness artificial intelligence for constructive purposes presents an unprecedented opportunity to make our own great leap forward. If each of our fields is inevitably going to be transformed, a priority for each of us is to climb aboard—to pitch in by helping to conceive what artificial intelligence might ideally accomplish. What might be its most likely results when our fields are “shaken to their roots” by machines that have with lightning speed taught themselves how to play our games, building not on our conventions but on innovations they have invented for themselves?

I’d very much like to know, for example, what will be learned in “synthetic biology” and from a new, comprehensive cosmology describing the world as a coherent whole, ordered by natural laws. We haven’t been able to make these discoveries yet on our own, but AI will certainly help. As these authors say, “Technology, strategy, and philosophy need to be brought into some alignment,” requiring a partnership between humans and AI. That can only be achieved if academics rise above their usual restraints to play a crucial role.

George McCully is a historian, former professor and faculty dean at higher education institutions in the Northeast, professional philanthropist and founder and CEO of the Catalogue for Philanthropy.

The Infinite Corridor is the primary passageway through the campus, in Cambridge, of MIT, a world center of artificial intelligence research and development.

Using AI in remote learning

From The New England Council (newenglandcouncil.com)

BOSTON

“In partnership with artificial intelligence (AI) company Aisera, Dartmouth College recently launched Dart InfoBot, an AI virtual assistant developed to better support students and faculty members during the pandemic. Nicknamed ‘Dart,’ the bot is designed to improve communication and efficiency while learning and working from home, with mere seconds of response time in natural language to approximately 10,000 students and faculty on both Slack and Dartmouth’s client services portal.

“The collaboration with Aisera allows for accelerated diagnosis and resolution times, automated answers to common information and technology questions, and proactive user engagement through a conversational platform.